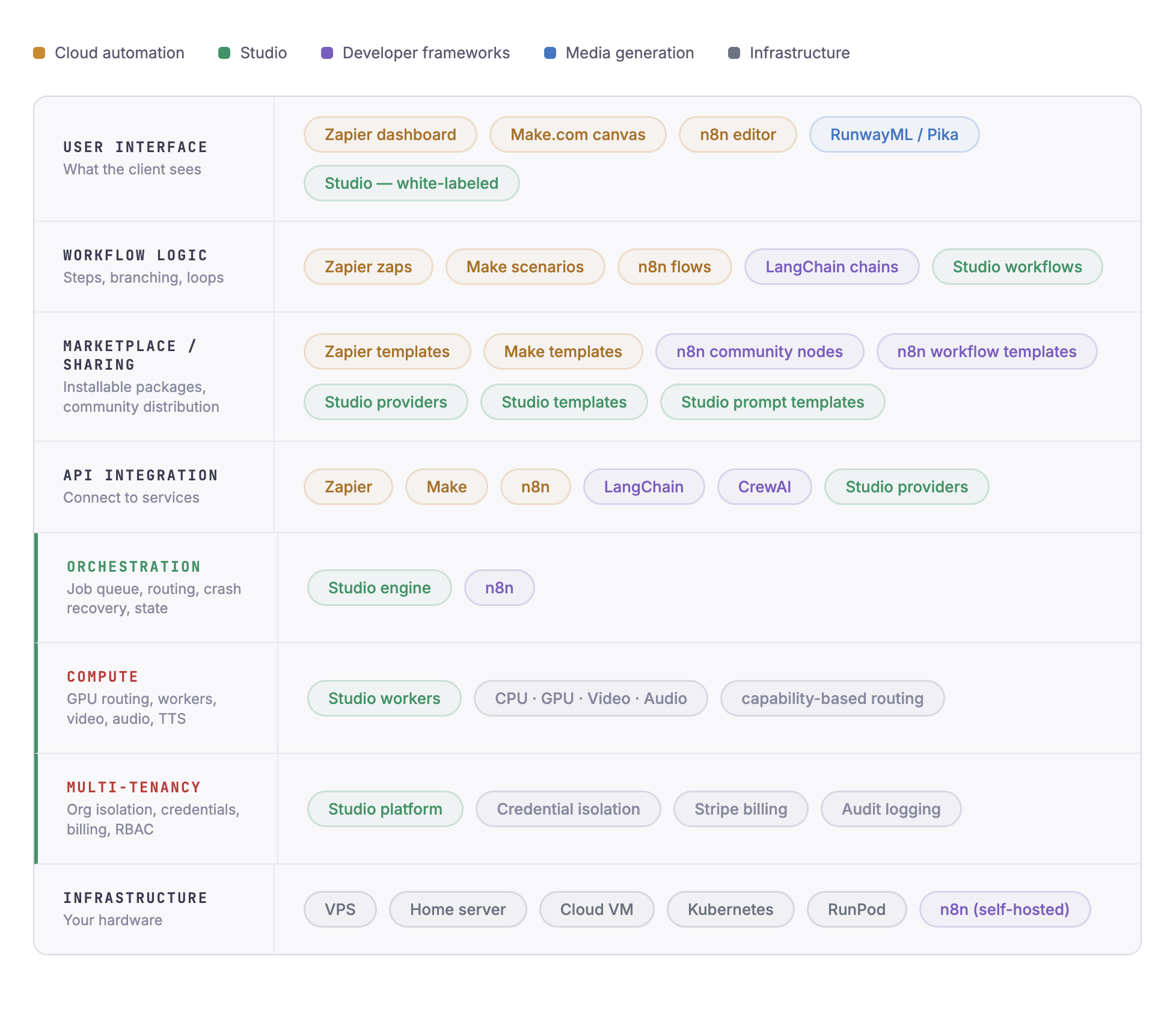

Own the execution layer.

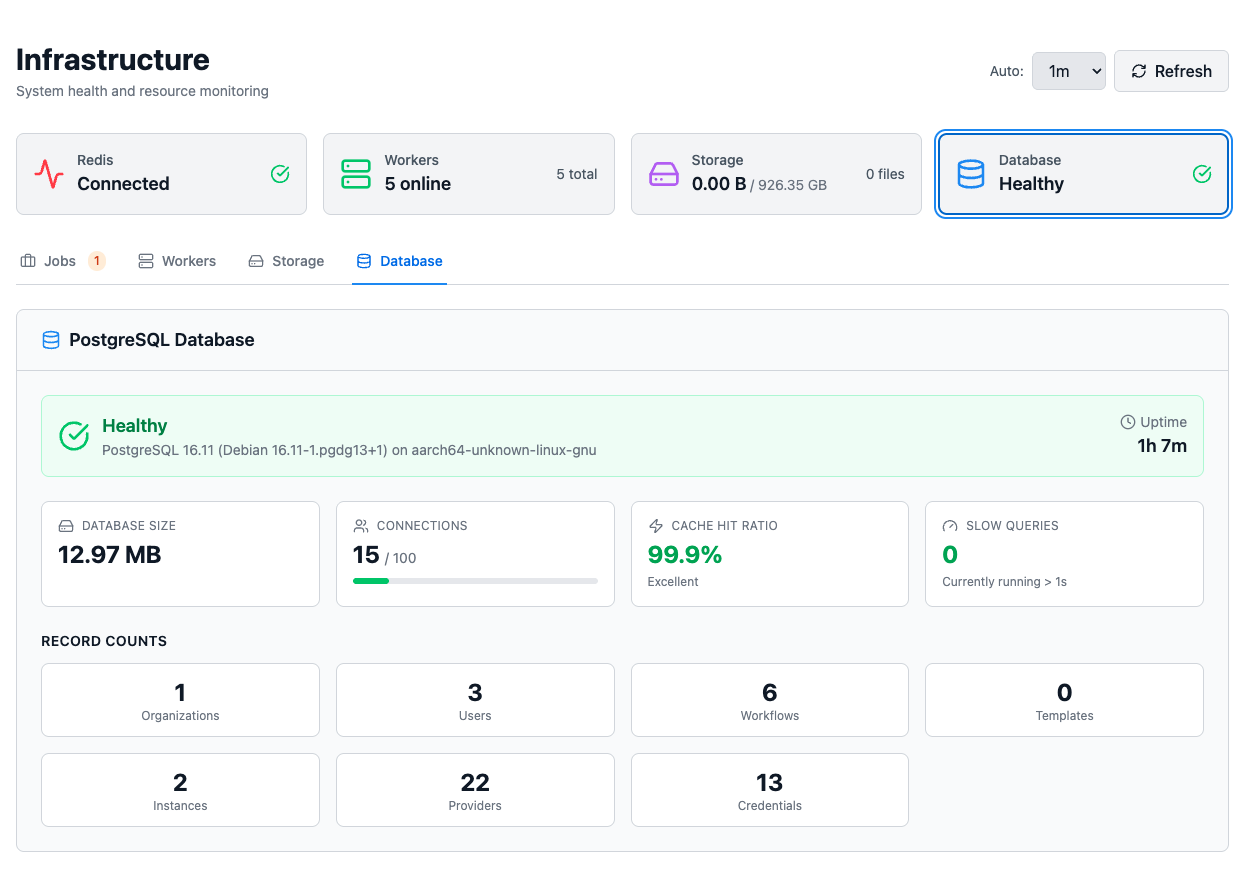

Stop renting your AI workflow infrastructure.

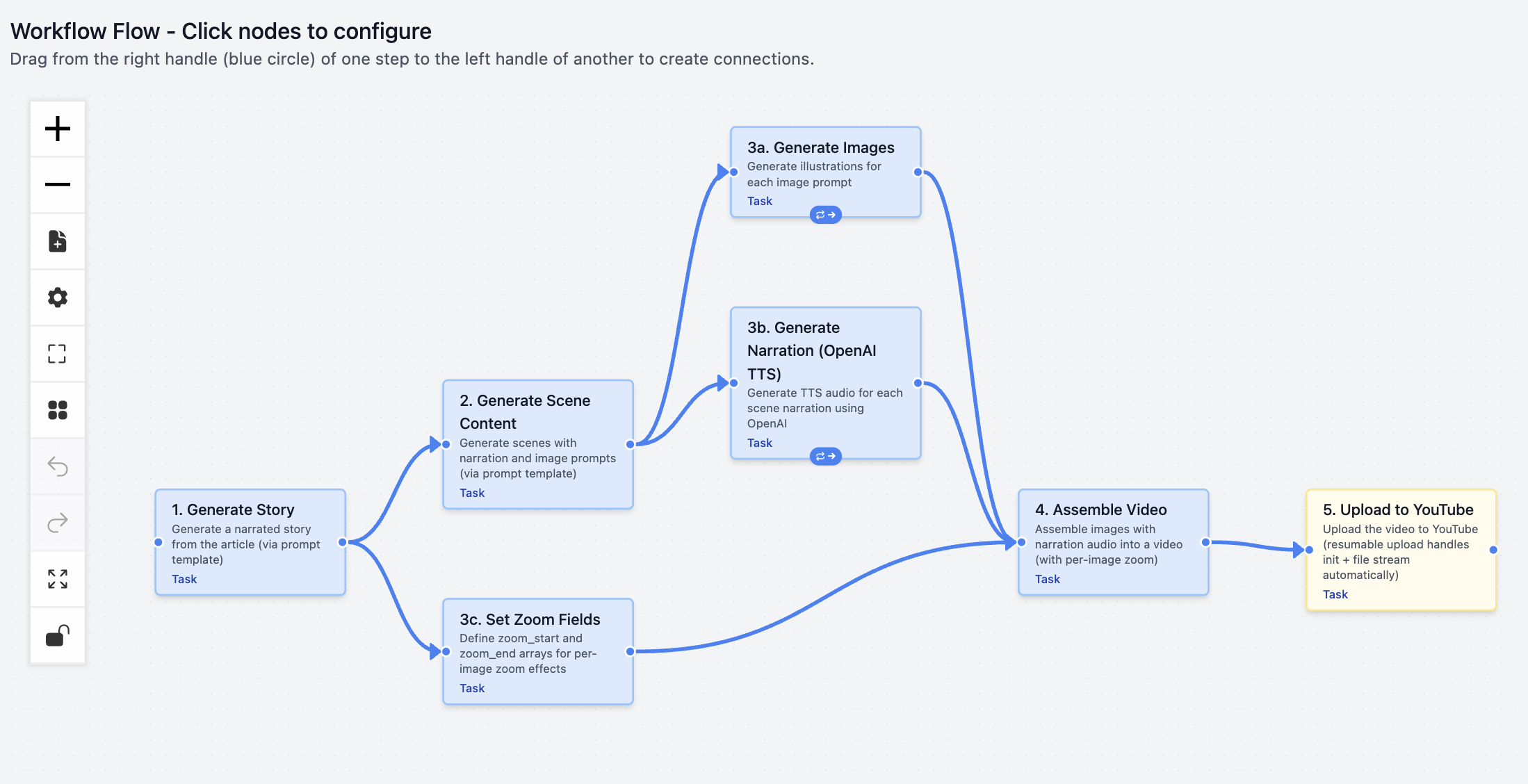

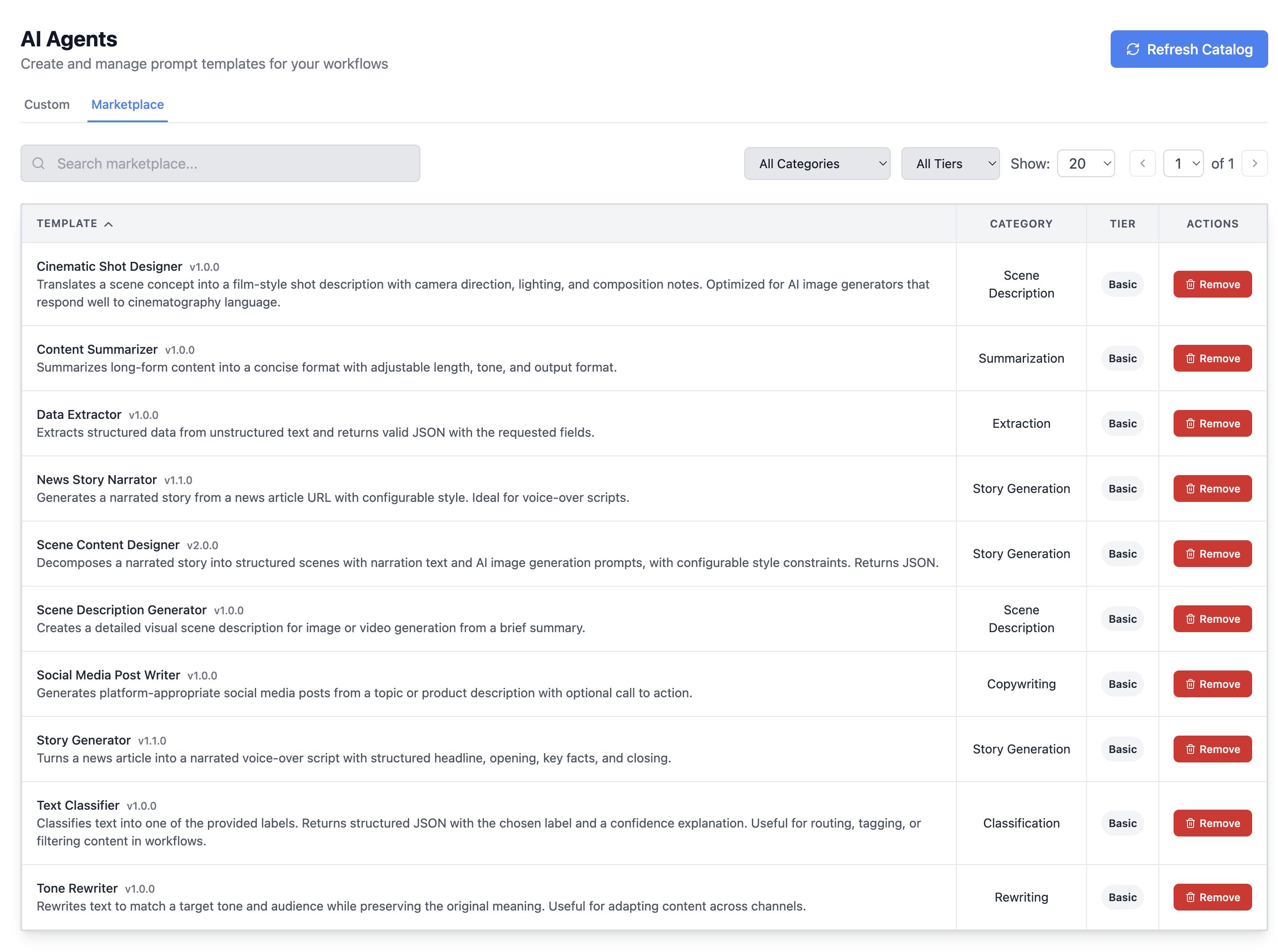

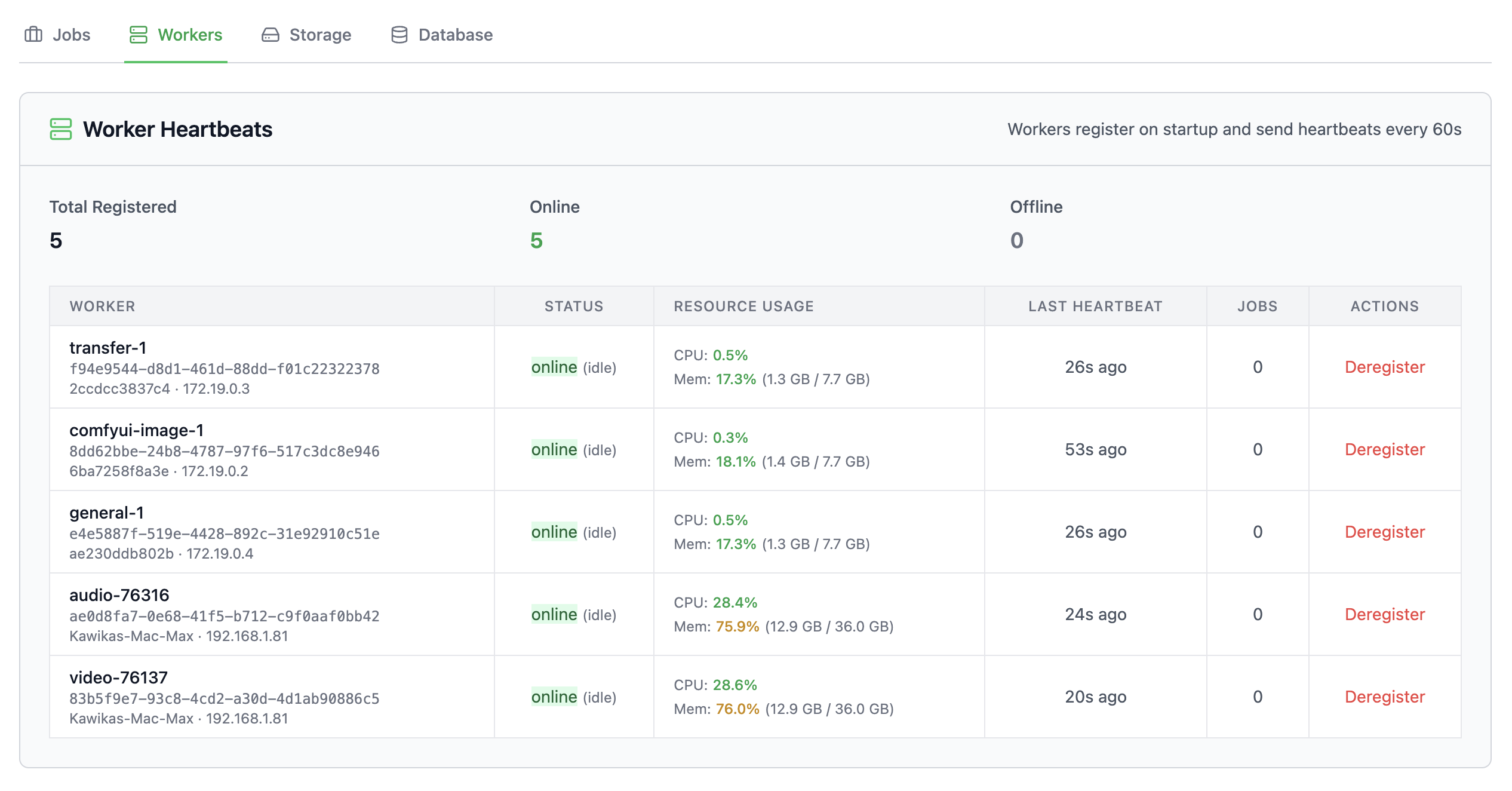

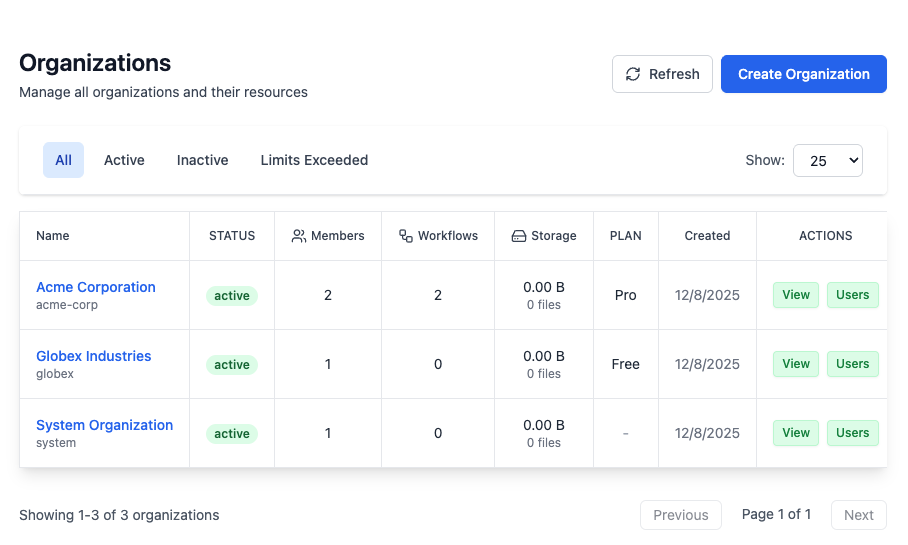

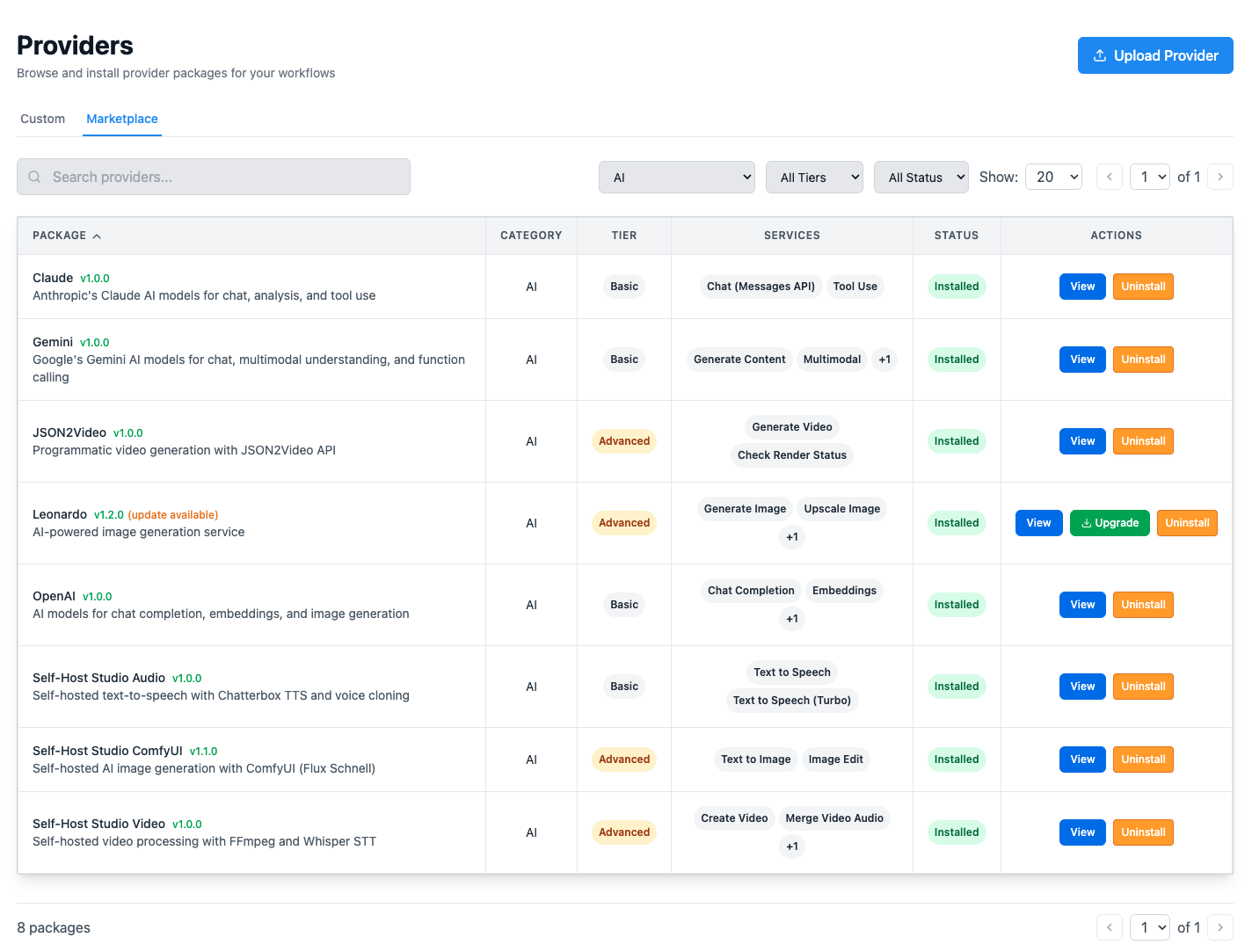

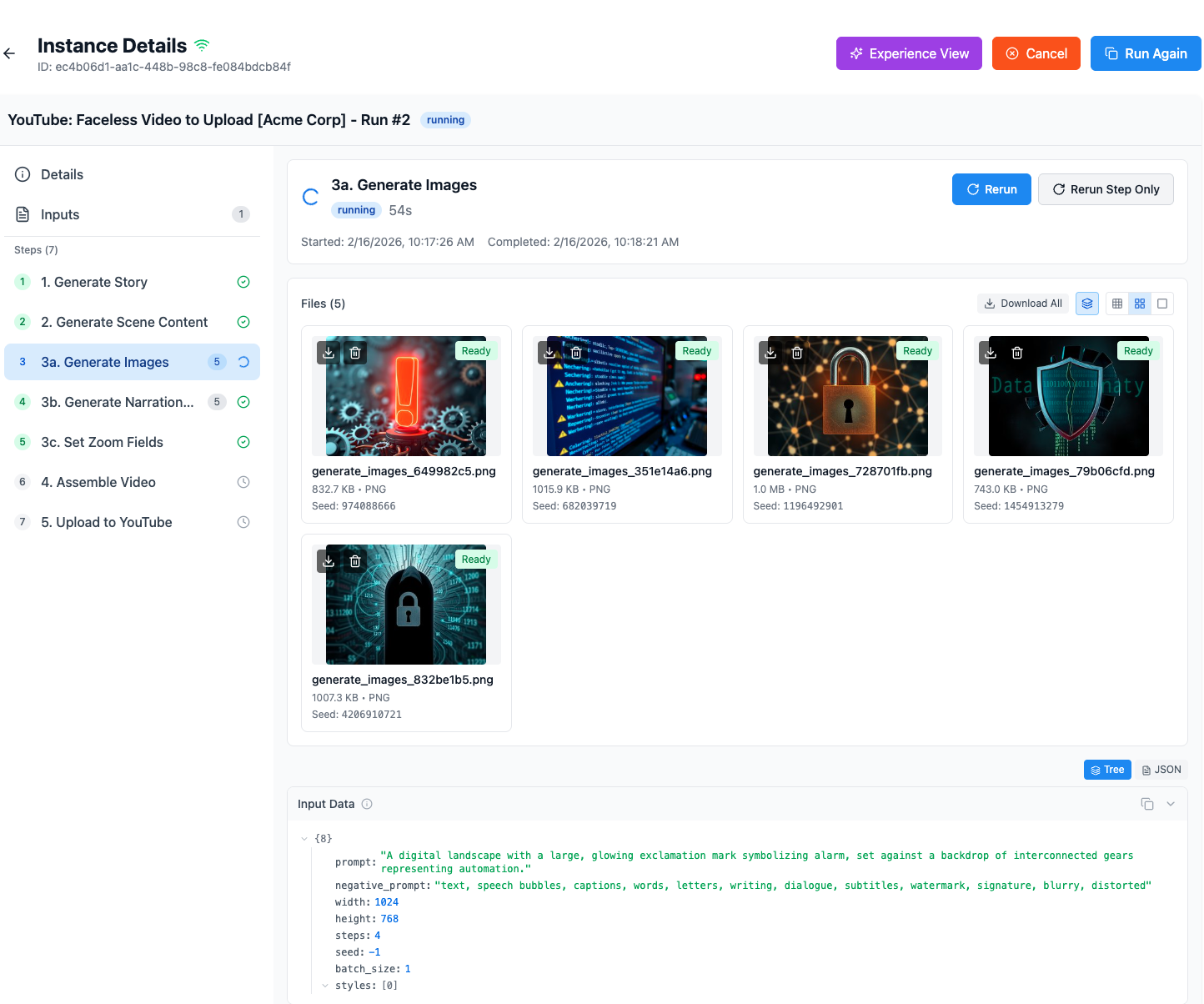

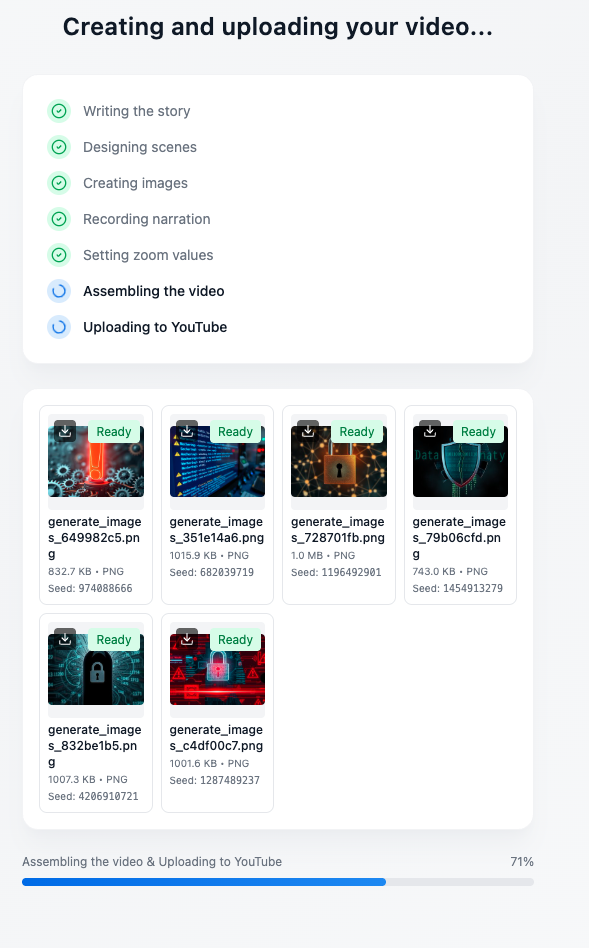

Studio is self-hosted execution infrastructure for your AI pipelines. Orchestrate LLMs, GPU compute, video rendering, and delivery — on hardware you control. Unlimited runs. No per-operation fees. No platform lock-in.